Data Science and Empirical Discovery: A New Discipline Pioneering a New Analytical Method

One of the essential patterns of science and industry in the modern era is that new methods for understanding — what I’ll call sensemaking from now on — often emerge hand in hand with new professional and scientific disciplines. This linkage between new disciplines and new methods follows from the deceptively simple imperative to realize new types of insight, which often means analysis of new kinds of data, using new techniques, applied from newly defined perspectives. New viewpoints and new ways of understanding are literally bound together in a sort of symbiosis.

One familiar example of this dynamic is the rapid development of statistics during the 18th and 19th centuries, in close parallel with the rise of new social science disciplines including economics (originally political economy) and sociology, and natural sciences such as astronomy and physics. On a very broad scale, we can see the pattern in the tandem evolution of the scientific method for sensemaking, and the codification of modern scientific disciplines based on precursor fields such as natural history and natural philosophy during the scientific revolution.

Today, we can see this pattern clearly in the simultaneous emergence of Data Science as a new and distinct discipline accompanied by Empirical Discovery, the new sensemaking and analysis method Data Science is pioneering. Given its dramatic rise to prominence recently, declaring Data Science a new professional discipline should inspire little controversy. Declaring Empirical Discovery a new method may seem bolder, but when we keep in mind the essential pattern of new disciplines appearing in tandem with new sensemaking methods, it is more controversial to suggest Data Science is a new discipline that lacks a corresponding new method for sensemaking. (I would argue it is the method that makes the discipline, not the other way around, but that is a topic for fuller treatment elsewhere.)

What is empirical discovery? While empirical discovery is a new sensemaking method, we can build on two existing foundations to understand its distinguishing characteristics, and help craft an initial definition. The first of these is an understanding of the empirical method. Consider the following description:

“The empirical method is not sharply defined and is often contrasted with the precision of the experimental method, where data are derived from the systematic manipulation of variables in an experiment. …The empirical method is generally characterized by the collection of a large amount of data before much speculation as to their significance, or without much idea of what to expect, and is to be contrasted with more theoretical methods in which the collection of empirical data is guided largely by preliminary theoretical exploration of what to expect. The empirical method is necessary in entering hitherto completely unexplored fields, and becomes less purely empirical as the acquired mastery of the field increases. Successful use of an exclusively empirical method demands a higher degree of intuitive ability in the practitioner.”

Data Science as practiced is largely consistent with this picture. Empirical prerogatives and understandings shape the procedural planning of Data Science efforts, rather than theoretical constructs. Semi-formal approaches predominate over explicitly codified methods, signaling the importance of intuition. Data scientists often work with data that is on-hand already from business activity, or data that is newly generated through normal business operations, rather than seeking to acquire wholly new data that is consistent with the design parameters and goals of formal experimental efforts. Much of the sensemaking activity around data is explicitly exploratory (what I call the ‘panning for gold’ stage of evolution – more on this in subsequent postings), rather than systematic in the manipulation of known variables. These exploratory techniques are used to address relatively new fields such as the Internet of Things, wearables, and large-scale social graphs and collective activity domains such as instrumented environments and the quantified self. These new domains of application are not mature in analytical terms; analysts are still working to identify the most effective techniques for yielding insights from data within their bounds.

The second relevant perspective is our understanding of discovery as an activity that is distinct and recognizable in comparison to generalized analysis. From this, we can summarize discovery as sensemaking intended to arrive at novel insights, through exploration and analysis of diverse and dynamic data in an iterative and evolving fashion.

Looking deeper, one specific characteristic of discovery as an activity is the absence of formally articulated statements of belief and expected outcomes at the beginning of most discovery efforts. Another is the iterative nature of discovery efforts, which can change course in non-linear ways and even ‘backtrack’ on the way to arriving at insights: both the data and the techniques used to analyze data change during discovery efforts. Formally defined experiments are much more clearly determined from the beginning, and their definition is less open to change during their course. A program of related experiments conducted over time may show iterative adaptation of goals, data and methods, but the individual experiments themselves are not malleable and dynamic in the fashion of discovery. Discovery’s emphasis on novel insight as preferred outcome is another important characteristic; by contrast, formal experiments are repeatable and verifiable by definition, and the degree of repeatability is a criterion of well-designed experiments. Discovery efforts often involve an intuitive shift in perspective that is recountable and retraceable in retrospect, but cannot be anticipated.

Building on these two foundations, we can define Empirical Discovery as a hybrid, purposeful, applied, augmented, iterative and serendipitous method for realizing novel insights for business, through analysis of large and diverse data sets.

Let’s look at these facets in more detail.

Empirical discovery primarily addresses the practical goals and audiences of business (or industry), rather than scientific, academic, or theoretical objectives. This is tremendously important, since the practical context impacts every aspect of Empirical Discovery.

‘Large and diverse data sets’ reflects the fact that Data Science practitioners engage with Big Data as we currently understand it: situations in which the confluence of data types and volumes exceeds the capabilities of business analytics to practically realize insights in terms of tools, infrastructure, practices, etc.

Empirical discovery uses a rapidly evolving hybridized toolkit, blending a wide range of general and advanced statistical techniques with sophisticated exploratory and analytical methods from a wide variety of sources that includes data mining, natural language processing, machine learning, neural networks, Bayesian analysis, and emerging techniques such as topological data analysis and deep learning.

What’s most notable about this hybrid toolkit is that Empirical Discovery does not originate novel analysis techniques; it borrows tools from established disciplines such as information retrieval, artificial intelligence, computer science, and the social sciences. Many of the more specialized or apparently exotic techniques data science and empirical discovery rely on, such as support vector machines, deep learning, or measuring mutual information in data sets, have established histories of usage in academic or other industry settings, and have reached reasonable levels of maturity. Empirical discovery’s hybrid toolkit is transposed from one domain of application to another, rather than invented.
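To give one of these borrowed techniques a concrete shape, here is a minimal sketch of measuring mutual information between two columns of categorical data. It is an illustration under assumptions, not a description of any particular team's practice: the function name `mutual_information` and the toy `device` and `purchased` columns are hypothetical, and the estimate is the simple plug-in version based on observed frequencies, which can be biased on small samples.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of mutual information (in bits) between two
    equally long sequences of discrete values."""
    if len(xs) != len(ys):
        raise ValueError("sequences must have the same length")
    n = len(xs)
    if n == 0:
        raise ValueError("sequences must not be empty")

    joint = Counter(zip(xs, ys))  # counts of (x, y) pairs
    count_x = Counter(xs)         # marginal counts of x
    count_y = Counter(ys)         # marginal counts of y

    mi = 0.0
    for (x, y), c in joint.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with counts
        mi += (c / n) * math.log2(c * n / (count_x[x] * count_y[y]))
    return mi


# Hypothetical toy data: device type fully determines the outcome here.
device = ["phone", "phone", "tablet", "tablet"]
purchased = ["yes", "yes", "no", "no"]
dependent_bits = mutual_information(device, purchased)

# Hypothetical toy data: the two columns are independent of each other.
independent_bits = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
```

In the first toy case, knowing the device removes all uncertainty about the outcome, so the estimate is one bit; in the second, the columns tell us nothing about each other and the estimate is zero. Real business data falls somewhere in between, which is what makes the measure useful for exploratory screening.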

Empirical Discovery is an applied method in the same way Data Science is an applied discipline: it originates in and is adapted to business contexts, it focuses on arriving at useful insights to inform business activities, and it is not used to conduct basic research. At this early stage of development, Empirical Discovery has no independent and articulated theoretical basis and does not (yet) advance a distinct body of knowledge based on theory or practice. All viable disciplines have a body of knowledge, whether formal or informal, and applied disciplines have only their cumulative body of knowledge to distinguish them, so I expect this to change.

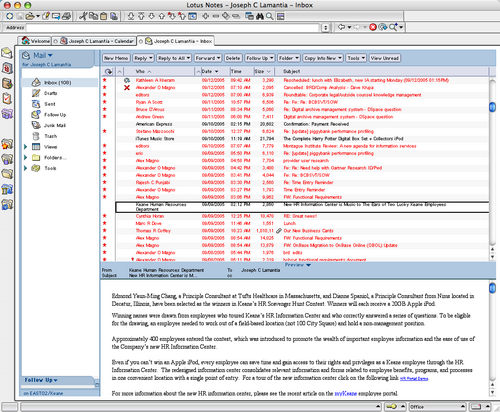

Empirical discovery is not only applied, but explicitly purposeful in that it is always set in motion and directed by an agenda from a larger context, typically the specific business goals of the organization acting as a prime mover and funding data science positions and tools. Data Science practitioners effect Empirical Discovery by making it happen on a daily basis – but wherever there is empirical discovery activity, there is sure to be intentionality from a business view. For example, even in organizations with a formal hack time policy, our research suggests there is little or no completely undirected or self-directed empirical discovery activity, whether conducted by formally recognized Data Science practitioners, business analysts, or others.

One very important implication of the situational purposefulness of Empirical Discovery is that there is no direct imperative for generating a body of cumulative knowledge through original research: the insights that result from Empirical Discovery efforts are judged by their practical utility in an immediate context. There is also no explicit scientific burden of proof or verifiability associated with Empirical Discovery within its primary context of application. Many practitioners encourage some aspects of verifiability, for example, by annotating the various sources of data used for their efforts and the transformations involved in wrangling data on the road to insights or data products, but this is not a requirement of the method. Another implication is that empirical discovery does not adhere to any explicit moral, ethical, or value-based missions that transcend working context. While Data Scientists often interpret their role as transformative, this is in reference to business. Data Science is not medicine, for example, with a Hippocratic oath.

Empirical Discovery is an augmented method in that it depends on computing and machine resources to increase human analytical capabilities: it is simply impractical for people to manually undertake many of the analytical techniques common to Data Science. An important point to remember about augmented methods is that they are not automated; people remain necessary, and it is the combination of human and machine that is effective at yielding insights. In the problem domain of discovery, the patterns of sensemaking activity leading to insight are intuitive, non-linear, and associative; activities with these characteristics are not fully automatable with current technology. And while many analytical techniques can be usefully automated within boundaries, these tasks typically make up just a portion of a complete discovery effort. For example, using latent class analysis to explore a machine-sampled subset of a larger data corpus is task-specific automation complementing human perspective at particular points of the Empirical Discovery workflow. This dependence on machine-augmented analytical capability is recent within the history of analytical methods. In most of the modern era (roughly the later 17th, 18th, 19th and early 20th centuries) the data employed in discovery efforts was manageable ‘by hand’, even when using the newest mathematical and analytical methods emerging at the time. This remained true until the effective commercialization of machine computing ended the need for human computers as a recognized role in the middle of the 20th century.
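As a small illustration of the task-specific automation described above, the sketch below shows one way a machine might draw a subset from a corpus too large to hold in memory, leaving the interpretation of that subset to a person. Several things here are assumptions rather than anything stated in this post: that ‘machine-sampled’ means uniform random sampling, that reservoir sampling is the technique used, and the names `reservoir_sample` and `corpus`. The latent class analysis step itself is not shown.

```python
import random


def reservoir_sample(stream, k, seed=None):
    """Return k items chosen uniformly at random from an iterable of
    unknown length, reading it only once (reservoir sampling)."""
    rng = random.Random(seed)
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            sample.append(item)
        else:
            # Keep the new item with probability k / (i + 1),
            # replacing a randomly chosen current member.
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = item
    return sample


# Hypothetical corpus: a generator standing in for a large set of records.
corpus = (f"record-{n}" for n in range(100_000))
subset = reservoir_sample(corpus, k=500, seed=42)
```

The machine handles the part that is impractical by hand, a single pass over the whole corpus, while deciding what to look for in the 500 sampled records remains a human judgment.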

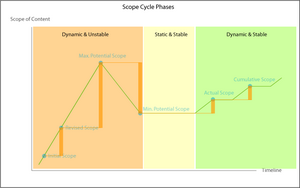

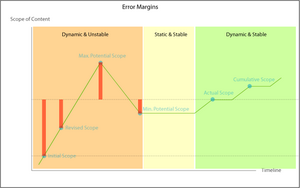

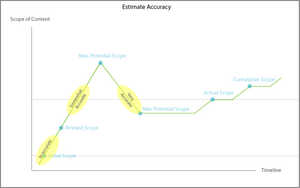

The reality of most analytical efforts — even those with good initial definition — is that insights often emerge in response to and in tandem with changing and evolving questions which were not identified, or perhaps not even understood, at the outset. During discovery efforts, analytical goals and techniques, as well as the data under consideration, often shift in unpredictable ways, making the path to insight dynamic and non-linear. Further, the sources of and inspirations for insight are difficult or impossible to identify both at the time and in retrospect. Empirical discovery addresses the complex and opaque nature of discovery with iteration and adaptation, which combine to set the stage for serendipity.

With this initial definition of Empirical Discovery in hand, the natural question is what this means for Data Science and business analytics. Three things stand out for me. First, I think one of the central roles played by Data Science is in pioneering the application of existing analytical methods from specialized domains to serve general business goals and perspectives, seeking effective ways to work with the new types (graph, sensor, social, etc.) and tremendous volumes (yotta, yotta, yotta…) of business data at hand in the Big Data moment and realize insights.

Second, following from this, Empirical Discovery is a methodological framework within and through which a great variety of analytical techniques at differing levels of maturity and from other disciplines are vetted for business analytical utility in iterative fashion by Data Science practitioners.

And third, it seems this vetting function is deliberately part of the makeup of empirical discovery, which I consider a very clever way to create a feedback loop that enhances Data Science practice by using Empirical Discovery as a discovery tool for refining its own methods.
